NVIDIA DGX: What Are Its Key Features?

What Types of AI Workloads Can NVIDIA DGX Handle?

NVIDIA DGX systems can handle many kinds of AI workloads. For more information about workflows, see section 4.2 below. DGX systems deliver cost-effective performance for several specific workloads, including:

- Deep Learning: Well suited to training deep neural networks with many parameters on very large datasets quickly and accurately.

- Machine Learning: Runs a wide range of models and algorithms, making it possible to process data and produce predictions quickly.

- Data Analytics: Speeds up data cleansing, feature engineering, and statistical modeling so that conclusions can be drawn from large amounts of data as quickly as possible.

- HPC: Acts as an engine for modeling, analysis, and design tasks that require large amounts of compute.

- Inference: Speeds up decision-making by serving already trained models in the environment where they are used.


How Does the DGX System Optimize Performance for AI Applications?

The DGX system uses several strategies to improve performance for AI workloads:

- Integration of NVIDIA GPUs: Uses high-performance NVIDIA GPUs to complete processing tasks quickly.

- NVLink Technology: Provides dense interconnections between multiple GPUs with high bandwidth and low latency.

- Optimized Software Stack: Ships with preconfigured, CUDA-ready components, so developers can get set up faster than if they configured everything from scratch.

- AI-Optimized Storage Solutions: Provide fast, efficient data retrieval and organization to avoid bottlenecks and maximize I/O throughput.

- Advanced Cooling Solutions: Maintain reliability under heavy computational load by using thermal management to keep temperatures under control.


What Is the Role of NVIDIA GPUs in DGX Servers?

NVIDIA GPUs are the core compute components of DGX servers, and the performance of the system hinges on them. Their benefits include:

- Parallel and Matrix Capabilities: Provide thousands of cores that execute many operations at the same time, increasing the rate at which complex calculations are performed.

- Tensor Core Technology: Increases efficiency and throughput for deep learning training and inference.

- Precision and Performance: Uses mixed-precision computing, which lowers numeric precision where it is safe to do so in order to raise throughput while preserving accuracy in AI model training and inference.

- Scalability: Supports both horizontal and vertical scaling, allowing efficient and flexible use of resources.

- Energy Efficiency: Delivers high performance per watt, reducing operational costs and energy usage in data centers.
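
The mixed-precision idea in the list above can be illustrated without a GPU. The following is a minimal sketch in plain Python that assumes only the standard library: the `e` format of the `struct` module rounds a value to IEEE 754 half precision (FP16). It compares a sum accumulated entirely in FP16 with a sum of the same FP16 inputs accumulated in higher precision, which is the general pattern of low-precision inputs with higher-precision accumulation. The helper names are illustrative and are not part of any NVIDIA API.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def sum_fp16_accumulator(values):
    """Accumulate in FP16: the running total is rounded after every add."""
    total = 0.0
    for v in values:
        total = to_fp16(total + to_fp16(v))
    return total


def sum_mixed_precision(values):
    """Keep inputs in FP16 but hold the running total in higher precision."""
    total = 0.0
    for v in values:
        total += to_fp16(v)
    return total


values = [0.001] * 10_000  # the exact sum is 10.0

pure_fp16 = sum_fp16_accumulator(values)
mixed = sum_mixed_precision(values)

print(f"FP16 accumulator:         {pure_fp16}")
print(f"FP16 inputs, wide accum.: {mixed}")
```

With the FP16 accumulator, the running total stalls well short of 10 once each addend becomes smaller than half the spacing between neighboring FP16 values. The mixed-precision version stays close to 10 and carries only the small rounding error of the inputs.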

NVIDIA HGX: Understanding the Platform

What Makes the HGX Platform Ideal for Data Centers?

The HGX platform is designed to adapt to the requirements that data centers place on new technology:

- High Performance: HGX integrates the latest NVIDIA GPUs, delivering the compute power needed for demanding AI workloads and high-performance computing (HPC) tasks.

- Efficient Utilization of Resources: Hardware resources are used efficiently, with high-bandwidth interconnects and a sophisticated software stack that maximize end-to-end efficiency and reduce latency.

- Scalability: Resources can be added to an existing deployment as needs grow, with little disruption to the performance of the data center.

- Reliability: Built for sustained performance and availability under continuous load, with advanced cooling technologies and robust components suited to the 24x7 critical operations of a data center.

How Does HGX Provide Flexibility for AI Infrastructure?

Several design features give HGX a high degree of flexibility for AI infrastructure:

- Modular Architecture: Makes upgrades and scaling simple, so data centers can adopt new technology and extend the current setup without interruption.

- AI Framework Support: Comes with libraries optimized for AI frameworks, making it portable and easy to integrate with many kinds of application development environments.

- MIG (Multi-Instance GPU): Partitions a single GPU into multiple isolated instances, so several workloads can run on one GPU at the same time.

- Support for Hybrid Configurations: Enables integration with cloud infrastructure and other storage solutions, combining on-premises and cloud deployment of the AI infrastructure.

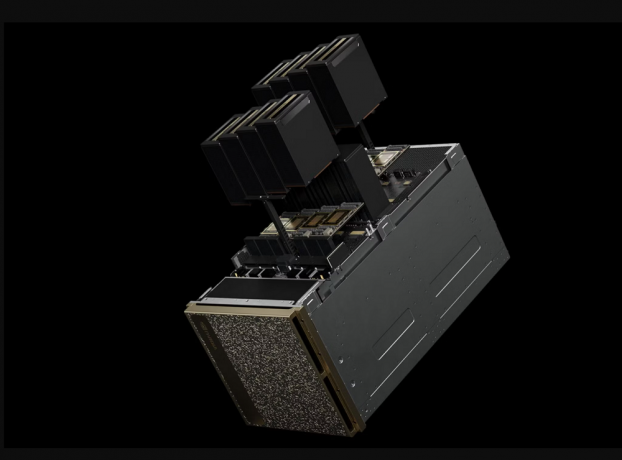

What Are the Key Components of an HGX System?

An HGX system consists of several components that work together to deliver optimal performance:

- NVIDIA Tensor Core GPUs: Integrated into the HGX platform, these provide leading performance for both AI and HPC.

- NVSwitch: Provides a high-speed interconnect between the GPUs, lowering latency and moving data between them more efficiently.

- CUDA-X Software Stack: A collection of libraries and tools for building AI applications on NVIDIA hardware, which shortens the time it takes to bring systems to market.

- High-Bandwidth Networking: Uses high-data-rate network ports to move data between machines in the data center in the shortest possible time.

- Advanced Cooling and Power Management: Keeps the system cool and operating reliably even under heavy computational load.

Comparison of NVIDIA DGX and HGX Platforms

What Are the Main Differences Between NVIDIA DGX and HGX?

The most significant differences between the NVIDIA DGX and HGX platforms lie in their design, intended applications, and deployment scenarios:

- Design Philosophy: The DGX system is marketed as a turnkey solution, meaning everything is built in and little configuration or custom engineering is required. The HGX platform, by contrast, is built for flexibility and offers more integration options.

- Target Use Cases: DGX systems are tailored to the complete AI lifecycle of training, testing, and deployment on a single system. HGX systems are typically deployed where large data centers need to run AI and HPC applications concurrently.

- Deployment Scenarios: DGX systems generally suit organizations that want a quick solution with minimal in-house setup, while HGX solutions suit organizations that need to integrate with existing data center facilities and require bespoke, scalable configurations.

How to Choose Between DGX and HGX for Your AI Workloads?

The choice between DGX and HGX depends on several factors:

- Workload Characteristics: For a stable, well-defined AI workload with a small number of users, DGX systems provide a neatly packaged solution. For variable or mixed workloads that demand a lot of tailoring, HGX offers the necessary flexibility.

- Scalability Needs: If your organization plans to expand its AI infrastructure over a long period, HGX is the better pick because its modular design accommodates various configurations. If scalability requirements are modest, DGX with its built-in options may suffice.

- Budget Considerations: DGX systems tend to be more cost-effective for small to medium deployments because of their integrated design. When planning a deployment, also factor in power management: DGX units come with integrated power management, whereas HGX-based systems rely on power management customized by the system builder.

- Integration Requirements: Where seamless interfacing with different types of storage systems and different cloud environments is required, HGX's support for hybrid configurations makes it the better fit.

Which Platform Offers Better Scalability?

From a scalability perspective, the HGX platform is generally the more adaptable of the two and offers more room for growth. Its modular architecture allows resources to be added in a fine-grained, tailored way, which suits organizations that intend to distribute AI workloads over many nodes and heterogeneous infrastructure. Support for hybrid configurations also allows HGX systems to be deployed alongside local and cloud-based solutions, which adds further room for expansion. DGX systems can also scale out across multiple systems, but their growth is constrained by the all-in-one design, since capacity is added in fixed, fully integrated units.

Deployment Scenarios for DGX and HGX

What Are Common Use Cases for NVIDIA DGX in Enterprises?

Because of their compact and powerful design, NVIDIA DGX systems are used across many enterprise solutions. Data analytics is one common application, since DGX systems are engineered for data ingestion, processing, and analytics at very large scale. Enterprises also use DGX for NLP tasks such as sentiment analysis and automated customer service, which require large and complex language models. DGX systems are also used in computer vision applications such as image recognition, image classification, and face recognition, which support use cases like surveillance and quality control.

How Is HGX Deployed in High-Performance Computing (HPC)?

In High-Performance Computing (HPC) settings, HGX platforms are used to run extremely demanding computational workloads. The modular and scalable structure of HGX makes it well suited to compute-intensive, highly parallel processing. Typical HPC application areas include climate simulation, which requires enormous computing power to reproduce intricate weather conditions over long periods. HGX systems are also used in genomic research, where genetic sequencing and analysis are carried out at high speed. Scientific studies and simulations in physics, materials science, and other fields benefit from HGX's support for hybrid configurations, which makes it easier to interoperate with compute and storage resources.

What Are the Deployment Considerations for AI Applications?

Deploying AI applications involves a number of requirements that should be satisfied to achieve the best results. First, analyze the computational needs of the AI models being developed, since this determines whether a DGX or an HGX system will be effective. Next, remember that infrastructure compatibility is vital: dependencies on storage, networking, and other components should be taken into account when commissioning the AI system. Future growth in usage should also be assessed to avoid constraints later. Finally, both the initial investment and the projected future costs have to be considered, because operating costs differ between DGX and HGX systems.
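
For the first step, a back-of-the-envelope memory estimate is often a useful starting point. The sketch below assumes mixed-precision training with the Adam optimizer, for which a commonly cited rule of thumb is about 16 bytes of model state per parameter (2 for FP16 weights, 2 for FP16 gradients, and 12 for FP32 master weights plus two optimizer moments). It ignores activations and assumes the model state can be split evenly across GPUs. The 80 GB per-GPU figure and the 7-billion-parameter model are example values only, not a statement about any particular DGX or HGX configuration.

```python
import math

# Assumed rule of thumb for mixed-precision Adam training (model state only).
BYTES_PER_PARAM_MIXED_ADAM = 16


def model_state_gb(num_params: int,
                   bytes_per_param: int = BYTES_PER_PARAM_MIXED_ADAM) -> float:
    """Approximate memory for weights, gradients, and optimizer state, in GB."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_state(num_params: int, gpu_memory_gb: float,
                       bytes_per_param: int = BYTES_PER_PARAM_MIXED_ADAM) -> int:
    """Lower bound on the GPU count needed just to hold the model state."""
    return math.ceil(model_state_gb(num_params, bytes_per_param) / gpu_memory_gb)


params = 7_000_000_000  # hypothetical 7-billion-parameter model
state_gb = model_state_gb(params)
gpus = min_gpus_for_state(params, gpu_memory_gb=80.0)

print(f"Model state: {state_gb:.1f} GB, at least {gpus} GPU(s) of 80 GB each")
```

The result is a lower bound. Activations, framework overhead, and communication buffers all add to the real footprint, so an estimate like this is best used to rule configurations out rather than to confirm that one will fit.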

Performance Considerations: DGX vs. HGX

How to Measure the Performance of DGX and HGX Systems?

When assessing the performance of DGX and HGX systems, it is important to take a broad approach that captures both the different workloads and the characteristics of the systems themselves. The key factors to assess are computational throughput, latency, and efficiency. Raw performance is often expressed in FLOPS (floating-point operations per second) or TOPS (tera operations per second). AI-specific benchmarks such as MLPerf help measure how efficiently a system runs practical AI workloads. Memory bandwidth and I/O throughput also matter, especially for workloads that process a lot of data in a short time. Performance profiling tools and monitoring software can be used to find bottlenecks and tune configurations.
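
To make the FLOPS metric concrete, here is a small, hedged sketch of how achieved throughput is typically derived: count the floating-point operations in a known kernel, time it, and divide. It uses a deliberately slow pure-Python matrix multiply so that it runs anywhere, and it relies on the usual convention of counting 2·m·n·k operations for an (m×k) by (k×n) product. The function names are illustrative, and the number it prints reflects the Python interpreter, not any GPU.

```python
import time


def matmul_flops(m: int, n: int, k: int) -> int:
    """Operation count for an (m x k) @ (k x n) product: one multiply and
    one add per inner-loop step, by the usual 2*m*n*k convention."""
    return 2 * m * n * k


def achieved_gflops(flop_count: float, seconds: float) -> float:
    """Convert an operation count and a wall-clock time into GFLOPS."""
    return flop_count / seconds / 1e9


def naive_matmul(a, b):
    """Reference matrix multiply on lists of lists (slow, for illustration)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [sum(a[i][p] * b[p][j] for p in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


size = 64
a = [[1.0] * size for _ in range(size)]
b = [[2.0] * size for _ in range(size)]

start = time.perf_counter()
c = naive_matmul(a, b)
elapsed = time.perf_counter() - start

flops = matmul_flops(size, size, size)
print(f"{flops} operations in {elapsed:.4f} s "
      f"= {achieved_gflops(flops, elapsed):.4f} GFLOPS")
```

The same arithmetic applies when the kernel runs on a GPU. Only the timing method changes, since GPU work is asynchronous and the timer has to wait for the device to finish.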

What Are the Best Configurations for Optimal DGX Performance?

To maximize the operational efficiency of DGX systems, pay careful attention to both hardware and software configuration. On the hardware side, ensure that the system is provisioned with up-to-date NVIDIA GPUs and enough RAM to work with very large datasets. Storage should also be chosen for performance, with high throughput and low latency, for example NVMe SSDs. On the software side, it is beneficial to use optimized frameworks and libraries such as CUDA, cuDNN, and TensorRT. Beyond general optimization, further gains are possible for specific applications with NVIDIA's DeepStream SDK and RAPIDS suite. Networking is also critical: very high-speed interconnects such as InfiniBand provide the bandwidth and low latency needed to run distributed training effectively.

How Do InfiniBand and NVLink Enhance Data Transfer Speeds?

InfiniBand and NVLink are both technologies that speed up data transfer for HPC and AI training, but they work at different levels. InfiniBand is a high-speed networking technology that provides low-latency, high-throughput connections between machines, which makes it possible to cluster multiple DGX or HGX systems. It handles workloads in which many nodes need to access distributed data concurrently by moving that data rapidly between systems, which drastically cuts the time taken to train very large AI models. NVLink, by contrast, forms a direct link between the GPUs within a single DGX or HGX system. Rather than staging inter-GPU traffic through host memory, NVLink lets GPUs exchange data directly, which avoids extra copies whose cost grows with every added GPU and speeds up AllReduce and other collective operations. Together, these technologies help ensure that the compute resources in DGX and HGX systems are used to the fullest.
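
AllReduce, mentioned above, combines a buffer from every GPU (for example, gradients) and leaves the combined result on all of them. One well-known way to implement it is the ring algorithm, in which each worker exchanges data only with its neighbors. The following is a simulation in plain Python, written as a sketch to show the data movement. It is not how NVLink, InfiniBand, or any NVIDIA library is implemented, and the function name is illustrative.

```python
def ring_allreduce(buffers):
    """Sum equal-length lists across workers using a simulated ring.

    buffers[i] is worker i's data. The length must be divisible by the
    number of workers. Returns one list per worker, each holding the
    element-wise sum of all inputs.
    """
    n = len(buffers)
    length = len(buffers[0])
    if length % n != 0:
        raise ValueError("buffer length must be divisible by worker count")
    chunk = length // n
    data = [list(b) for b in buffers]  # copy so the inputs stay untouched

    def span(c):
        """Index range covered by chunk c."""
        return range(c * chunk, (c + 1) * chunk)

    # Phase 1, reduce-scatter: after n-1 steps, worker i holds the full
    # sum for chunk (i + 1) % n.
    for step in range(n - 1):
        # Snapshot all outgoing chunks first, since sends are simultaneous.
        sends = []
        for i in range(n):
            c = (i - step) % n
            sends.append((c, [data[i][j] for j in span(c)]))
        for i, (c, payload) in enumerate(sends):
            dst = (i + 1) % n
            for offset, j in enumerate(span(c)):
                data[dst][j] += payload[offset]

    # Phase 2, all-gather: circulate the finished chunks around the ring.
    for step in range(n - 1):
        sends = []
        for i in range(n):
            c = (i + 1 - step) % n
            sends.append((c, [data[i][j] for j in span(c)]))
        for i, (c, payload) in enumerate(sends):
            dst = (i + 1) % n
            for offset, j in enumerate(span(c)):
                data[dst][j] = payload[offset]

    return data


workers = [
    [1, 2, 3, 4],
    [10, 20, 30, 40],
    [100, 200, 300, 400],
    [1000, 2000, 3000, 4000],
]
result = ring_allreduce(workers)
print(result[0])
```

Each worker sends and receives only one chunk per step, so the traffic per link stays roughly constant as workers are added. This is why the speed of the links between neighbors has such a direct effect on the total time of the operation.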

Future Trends in NVIDIA DGX and HGX Technologies

What Innovations Are Expected in the Next Generation of DGX?

Next-generation DGX systems are expected to include several advances that extend the limits of performance and efficiency in AI processing. One is the adoption of advanced GPU designs such as NVIDIA's Hopper architecture, which significantly improve performance and power-efficiency metrics. These GPUs are expected to be paired with the latest HBM3 memory, offering high capacity and speed for data processing. An enhanced NVLink is also expected to improve communication between GPUs by increasing bandwidth and lowering latency. In addition, more deep learning processing units and Tensor Cores should make deep learning more efficient, so that bigger and more sophisticated models can be trained in less time.

How Will HGX Evolve to Meet Future AI Workload Demands?

The growing requirements of future AI workloads suggest that HGX platforms will continue to evolve. Future HGX systems are likely to build multi-GPU setups on advanced interconnect options such as PCIe Gen 5 and future versions of NVLink, offering low-latency, high-bandwidth inter-GPU communication. Next-generation GPUs with more Tensor Cores will improve the execution of AI operations, especially the training of large deep learning models. The software will evolve alongside the hardware: software layers tuned to the needs of the AI frameworks running on the HGX platform will increase efficiency and ease integration and workflow orchestration. Taken together, these advances should allow the HGX platform and the systems built on it to keep up with increasingly demanding AI models.

What Are the Implications of AI Advancements for DGX and HGX?

The rapid progress of AI technologies has substantial effects on both DGX and HGX systems. Improved hardware specifications allow these systems to handle more advanced models, larger datasets, and more sophisticated simulations, opening new possibilities for AI research and practice. With the appropriate advances, industries will be able to address challenges that were previously out of reach, such as real-time language interpretation, automated driving, and predictive modeling in healthcare and finance. In addition, the combination of next-generation hardware and smarter software will reduce the cost and time of AI training and modeling, making these solutions accessible to more industries. Over time, DGX and HGX development is likely to remain at the center of technological progress in the AI field.